Understanding Artificial Intelligence: What counts as AI?

Posted: July 19, 2024

What is the difference between AI and Machine Learning?

Traditionally, artificial intelligence (AI) has referred to any kind of technology that attempts to emulate human intelligence. Machine learning algorithms are one kind of computing that seems to fit the bill. Machine learning is itself a general term for a variety of computational techniques that can improve their own performance, or “learn,” in response to input, usually using statistical calculations.

Though machine learning algorithms have been around for at least sixty years, their performance has become more impressive recently because they have more data to learn from and more powerful processors on which to run. Because of machine learning’s recent success in a variety of domains (such as text and image generation, computer vision, voice recognition, industrial anomaly detection and predictive analytics), many people now use the term “AI” synonymously with “machine learning.” Neural networks are themselves one kind of machine learning, and deep learning is a kind of machine learning that uses neural networks with many layers.

Types of machine learning

Machine learning techniques generally fall into three categories corresponding to the different ways they learn to process data. Many AI systems, such as ChatGPT, combine several of these techniques:

1. Supervised learning learns from labeled examples how to sort data into categories. For example, your email client probably looks at every email you receive and classifies it as spam or not spam. It may well rely on a supervised learning AI that was initially fed large numbers of emails that human supervisors had labeled as either spam or not spam. With enough of this supervised training, the AI can look at an email that it’s never seen before and classify it as spam or not.

Supervised learning can also predict where an input falls on a continuum: human supervisors can feed an AI lots of data about houses, including their sale prices, locations and sizes, and the AI will learn to predict what price a house will sell for given its location, square footage and other information.

Within industry, supervised learning can use these abilities to conduct predictive maintenance. Human supervisors can show the AI numerous examples of sensor data from assets that were in need of maintenance and data from assets that were operating optimally. After training, the AI can look at real-time sensor data from an asset and predict how long it will be before it needs maintenance.

2. Unsupervised learning discovers patterns in data that humans might not notice. For example, an unsupervised AI could look at millions of X-rays and discover patterns in the pixels of some X-rays that deviate from the rest. Those deviations could indicate that the X-ray patient has a tumor or some other health problem. Similarly, unsupervised AI can detect anomalies in industrial assets. It can look at thousands of hours of sensor data to discover subtle variations a human might not be able to detect. In this case, the deviations might indicate that something is wrong with the asset.

3. Reinforcement learning emulates the way psychologists used to train rats to run through mazes: every time the rat completes the maze successfully, it gets a rewarding snack—so eventually it learns to run the maze correctly every time. Programmers can use reinforcement learning to teach an AI games such as chess. The AI plays thousands of games, getting a reward signal when it wins, but not if it loses. Eventually, it’s able to learn which moves are most likely to result in a win.

The same principle that lets AI win games of chess also lets it figure out the best moves for optimizing operational efficiency. An AI could get a larger reward signal every time it lowers costs and downtime while raising output or throughput. Eventually, the model will learn which combination of machine settings and environmental factors results in an optimized production schedule.
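To make the three categories concrete, here is a minimal Python sketch of each one. It is an illustration under simplifying assumptions, not a picture of how production systems are built. The function names (`fit_line`, `find_anomalies`, `learn_best_setting`), the sample numbers, and the choice of techniques are all hypothetical stand-ins picked for brevity: a least-squares line for supervised learning, a simple distance-from-average check for unsupervised anomaly detection, and an "epsilon-greedy" trial-and-error loop for reinforcement learning.

```python
import random
from statistics import mean, stdev


def fit_line(sizes, prices):
    """Supervised: learn price = slope * size + intercept from labeled examples."""
    size_avg, price_avg = mean(sizes), mean(prices)
    covariance = sum((s - size_avg) * (p - price_avg) for s, p in zip(sizes, prices))
    spread = sum((s - size_avg) ** 2 for s in sizes)  # assumes sizes are not all equal
    slope = covariance / spread
    return slope, price_avg - slope * size_avg


def find_anomalies(readings, threshold=3.0):
    """Unsupervised: no labels; flag readings far from the average of the data."""
    center, scale = mean(readings), stdev(readings)
    return [i for i, r in enumerate(readings) if abs(r - center) > threshold * scale]


def learn_best_setting(success_rates, rounds=5000, explore=0.1, seed=0):
    """Reinforcement: try settings, collect rewards, and favor what has worked.

    success_rates[i] is the (hidden) chance that setting i earns a reward.
    The learner never reads it directly; it only sees the rewards.
    """
    rng = random.Random(seed)
    n = len(success_rates)
    tries = [0] * n
    estimates = [0.0] * n  # running average reward seen for each setting
    for _ in range(rounds):
        if rng.random() < explore:
            choice = rng.randrange(n)  # occasionally explore at random
        else:
            choice = max(range(n), key=lambda i: estimates[i])  # otherwise exploit
        reward = 1.0 if rng.random() < success_rates[choice] else 0.0
        tries[choice] += 1
        estimates[choice] += (reward - estimates[choice]) / tries[choice]
    return max(range(n), key=lambda i: estimates[i])


if __name__ == "__main__":
    # Made-up training data: square footage and sale price.
    slope, intercept = fit_line([1000, 1500, 2000], [200_000, 300_000, 400_000])
    print("predicted price for 1,800 sq ft:", slope * 1800 + intercept)

    # Made-up sensor readings with one unusual value at position 7.
    readings = [50.0, 50.2, 49.8, 50.1, 49.9] * 4
    readings[7] = 75.0
    print("positions of unusual readings:", find_anomalies(readings))

    # Three hypothetical machine settings with different hidden success rates.
    print("setting the learner prefers:", learn_best_setting([0.2, 0.5, 0.8]))
```

The contrast is in what each function is given. `fit_line` needs the right answers (prices) alongside its inputs, `find_anomalies` gets only the raw readings, and `learn_best_setting` gets nothing up front and has to earn its information through rewards.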

What are transformer models?

Transformer models are a relatively new kind of neural network architecture that has revolutionized the performance of large language models (LLMs) and other forms of generative AI since it was introduced in 2017. The “GPT” in OpenAI’s chatbot, ChatGPT, stands for “generative pre-trained transformer” because it uses a transformer model to generate new text after being pre-trained on enormous quantities of unlabeled text to recognize statistical patterns in how words combine.

Getting computers to generate language has always been difficult because the word that comes next in a sentence often depends not just on the word that comes before it, but on words five words back or in another sentence entirely. For example, in the sentence, “Paul waved to John when he saw him,” “him” could refer to John or Paul. But in the sentence, “John saw him,” “him” can’t refer to John—though it could refer to Paul! If you need a word that refers to John, you need to use “himself.” It’s hard to program a computer to distinguish the two cases.

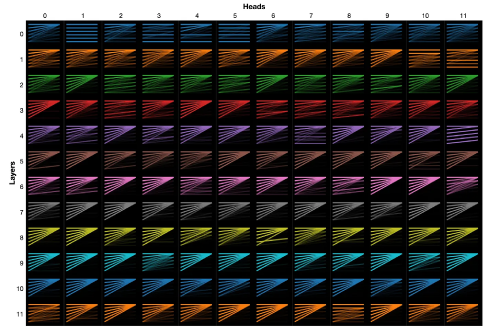

Transformer models can learn these contextual differences because they assign each word in a sequence a set of numbers indicating how important every other word in the sequence is to it. As a result, transformer models predict more accurately whether “him” or “himself” is more likely to follow “John saw…” in any given passage: they encode how important each of the other words in the passage is to the next word.
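The importance numbers described above are usually called attention weights. Here is a minimal sketch of how they can be computed, assuming each word has already been turned into a short list of numbers. The two-dimensional word vectors below are made up for illustration, and the function names are hypothetical; real models learn much longer vectors and apply separate learned "query," "key" and "value" transformations first. The calculation itself (a dot product, scaled by the square root of the vector length, then passed through a softmax) follows the scaled dot-product attention used in transformer models.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that add up to 1."""
    biggest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """How much the word behind `query` should attend to each word in `keys`."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


def attend(query, keys, values):
    """Blend the value vectors, weighted by the attention weights."""
    weights = attention_weights(query, keys)
    width = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(width)]


if __name__ == "__main__":
    # Hypothetical toy vectors; a trained model would learn these.
    words = ["Paul", "waved", "John"]
    keys = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
    query_for_him = [1.0, 0.0]  # made-up vector for the word "him"

    for word, weight in zip(words, attention_weights(query_for_him, keys)):
        print(f"{word}: {weight:.2f}")
```

Because the weights always add up to 1, they can be read as how the model divides its attention across the earlier words when deciding what comes next.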

For a thorough, non-technical explanation of how transformers work, check out the philosopher Ben Levinstein’s guide to transformers.

This ability to encode how one item in a sequence contextually depends on others helps computers do more than just generate sentences. For example, the AlphaFold AI uses transformer technology to predict the 3D structure of proteins based on the sequence of amino acids that compose them. You can imagine how transformer technology could optimize supply chains, manufacturing processes, or any other complex industrial process in which each step in a sequence depends on multiple other steps.

There is no doubt that various types of AI models will continue to help operations managers and engineers across the industrial sector improve and refine their operations and supply chains with increasing levels of sophistication, as they have been doing for decades. And new applications will undoubtedly change how industrial workers train for and engage with their day-to-day tasks. But whether these machine learning techniques will ever lead to artificial general intelligence or the so-called AI singularity remains an open question.